Auric_

Manufacturers usually give recommended circuits for the ADC in the datasheet (in this case, a sigma-delta ADC) and even recommend component values for the filters that suppress differential and common-mode noise, but I would like to read an alternative opinion. I have also come across a different approach in the schematics of existing devices. I have doubts about those solutions, and I would like to hear what my colleagues think of these "non-standard solutions" so that I can understand their pros and cons.

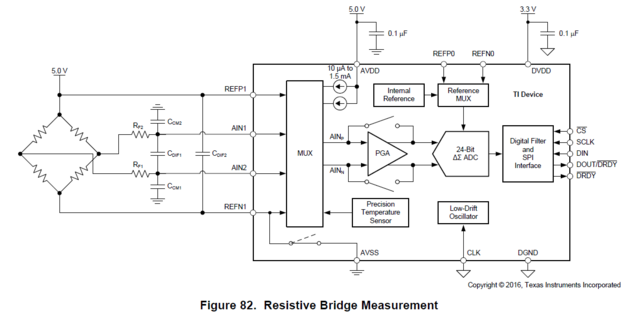

In weight measurement, the bridge is usually excited from 5 V, and the ADC reference voltage is taken from the same supply. Because the measurement is ratiometric, variations in the excitation voltage (for example, temperature drift of the supply) do not affect the ADC readings, within certain limits.
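
To make the ratiometric argument concrete, here is a minimal sketch of an idealized ADC whose full-scale range is ±Vref/gain (as on the ADS1220). The function name, the gain of 128, and the half-load operating point are my own assumptions for illustration; noise, offset, and gain error are ignored.

```python
def adc_code(v_exc, sensitivity_mv_per_v=2.0, load_fraction=0.5,
             gain=128, bits=24, ref_ratio=1.0):
    """Ideal output code of a bipolar ADC with full scale = +/-Vref/gain.

    v_exc                : bridge excitation voltage, V
    sensitivity_mv_per_v : bridge sensitivity at full load, mV/V
    load_fraction        : applied load as a fraction of rated load (0..1)
    ref_ratio            : Vref / v_exc (1.0 = reference taken directly
                           from the excitation supply)
    """
    v_signal = v_exc * sensitivity_mv_per_v * 1e-3 * load_fraction
    v_ref = v_exc * ref_ratio
    full_scale = v_ref / gain
    # v_exc appears in both v_signal and full_scale, so it cancels here.
    return round(v_signal / full_scale * 2 ** (bits - 1))


# Same load, different excitation voltages: the code does not change.
for v_exc in (5.0, 4.8, 5.2):
    print(v_exc, adc_code(v_exc))
```

The excitation voltage cancels only as long as the signal and the reference see the same supply variation, which is the "within certain limits" caveat above.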

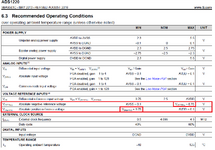

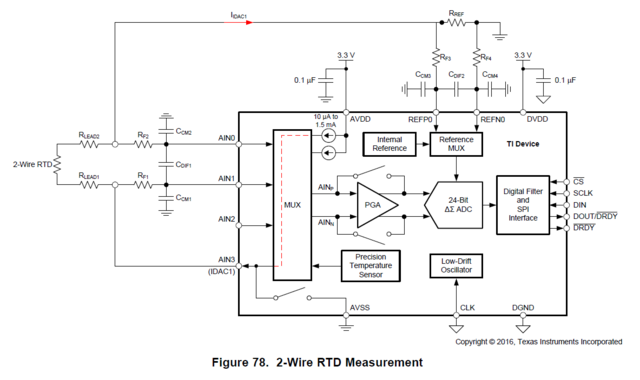

In the Texas Instruments circuits for the ADS1220, the reference input has only a differential filter (a 90-100 nF capacitor), while the measured input signal passes through an RC filter plus a differential capacitor.
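
For comparing such filters, the corner frequencies of a symmetric input filter can be estimated with the usual first-order formulas. This is only a sketch: it assumes one series resistor per leg, one capacitor across the pair, and one capacitor from each leg to ground. The component values below are hypothetical and are not taken from the TI schematics.

```python
import math


def rc_cutoffs(r_series, c_diff, c_cm):
    """Approximate -3 dB corners of a symmetric differential RC filter.

    r_series : series resistor in each leg, ohms
    c_diff   : capacitor between the two legs, farads
    c_cm     : capacitor from each leg to ground, farads
    """
    # Differential signals see both resistors in series, and the two
    # common-mode capacitors in series (c_cm / 2) in parallel with c_diff.
    f_diff = 1.0 / (2 * math.pi * 2 * r_series * (c_diff + c_cm / 2))
    # Common-mode signals see one resistor and one capacitor per leg.
    f_cm = 1.0 / (2 * math.pi * r_series * c_cm)
    return f_diff, f_cm


# Hypothetical values, chosen only for illustration.
f_diff, f_cm = rc_cutoffs(r_series=1e3, c_diff=100e-9, c_cm=10e-9)
print(f"differential corner: {f_diff:.0f} Hz, common-mode corner: {f_cm:.0f} Hz")
```

With no series resistors, as on the reference input described above, the corner is set by whatever source impedance drives the capacitor, so these formulas do not apply directly.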

Hence two questions:

1. Strain gauges are calibrated anyway, and their sensitivity is typically 2 mV/V, so with 5 V excitation the useful signal is less than 10 mV and a 5 V reference is large by comparison. How useful or harmful is it to take the reference voltage from a divider, bringing it closer to the measured signal? I would like to hear all the pros and cons, especially from anyone who has used this. As I understand it from the circuits I have seen, the designers probably wanted more accuracy by scaling the reference down: the reference still tracks the excitation, so supply variations are still compensated, but each ADC count (discrete step) corresponds to a smaller voltage.

2. In the different circuits the reference voltage comes from outside the board: for a resistive temperature sensor it is taken from the shunt, and for a load cell it is taken from the sensor supply. Either way it is "dirty", because the conductors run "in the field", so the interference should be the same for both circuits; I personally did not find a difference. I want to understand where filters can be omitted and where they are needed. For example, for thermistors the reference input circuit has, in addition to the differential capacitor, common-mode capacitors (each reference wire connected to ground through a capacitor) as part of an RC filter, whereas for strain measurement the manufacturer uses only a differential capacitor. I do not understand why the measures are so different under the same initial conditions. The choice of components is also not entirely clear: in one case there are no resistors in the reference voltage circuit, and in the other case there are.
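
For question 1, the effect of a divided reference on the ideal step size can be put into numbers. The sketch below assumes a 24-bit bipolar converter with full scale ±Vref/gain, a gain of 128, and a 10 mV maximum signal; the function name and the divider ratios are assumptions chosen for illustration.

```python
def lsb_and_span(v_ref, gain=128, v_signal_max=0.010, bits=24):
    """Return (ideal LSB in volts, fraction of positive full scale used)."""
    full_scale = v_ref / gain              # positive full-scale input, V
    lsb = 2 * full_scale / 2 ** bits       # bipolar range spans 2 * full_scale
    used = v_signal_max / full_scale
    return lsb, used


for ratio in (1.0, 0.5):                   # Vref = 5 V direct, or divided by 2
    lsb, used = lsb_and_span(5.0 * ratio)
    print(f"Vref = {5.0 * ratio:.2f} V: LSB = {lsb * 1e9:.2f} nV, "
          f"signal uses {used * 100:.1f} % of full scale")
```

Halving the reference halves the ideal LSB and doubles the share of the range that the signal occupies. This only describes quantization: it says nothing about the converter's input-referred noise, which is what limits the usable resolution in practice, and the divider must not push the full-scale range below the maximum signal.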

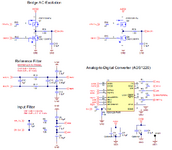

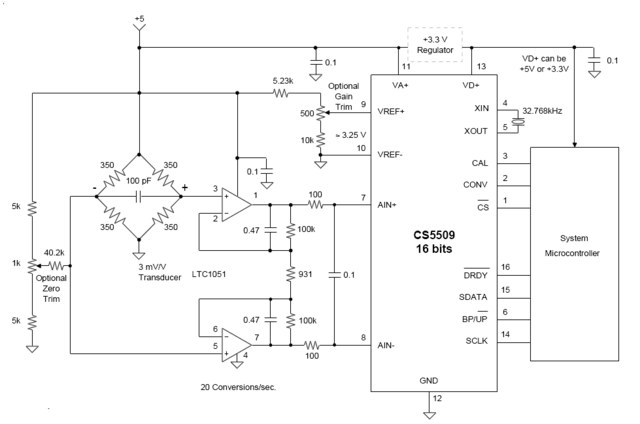

Here is an alternative circuit, where there are dividers and no reference filters, and this happens
