mtwieg


I'm trying to design a fully differential IF amplifier in the 0.5um ON Semi process, and its input-referred noise voltage is going to be critical to the system's overall performance. The source impedance is low, in the range of 50-1000 ohms, so to keep NF<3dB I'll need vg on the order of 1-2nV/√Hz. The operating frequency range is around 200kHz-2MHz, so flicker noise isn't a huge issue, and power consumption isn't critical either.
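As a rough sanity check on that noise target, here is a minimal Python sketch of the arithmetic. It assumes the source looks like a single resistor at 290 K and that the amplifier's input current noise is negligible (reasonable for a MOS gate at these frequencies, but still an assumption). The function names are made up for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K
T_REF = 290.0       # assumed source temperature, K


def source_noise_density(r_source):
    """Thermal noise voltage density of a resistor, in V/sqrt(Hz)."""
    return math.sqrt(4.0 * K_B * T_REF * r_source)


def max_amp_noise_density(r_source, nf_db):
    """Largest input-referred amplifier noise voltage that keeps NF at nf_db.

    Uses F = 1 + vn_amp^2 / vn_src^2, i.e. amplifier current noise is ignored.
    """
    noise_factor = 10.0 ** (nf_db / 10.0)
    return source_noise_density(r_source) * math.sqrt(noise_factor - 1.0)


if __name__ == "__main__":
    for r in (50.0, 200.0, 1000.0):
        vn = max_amp_noise_density(r, 3.0)
        print(f"Rs = {r:6.0f} ohm -> amplifier noise budget {vn * 1e9:.2f} nV/rtHz")
```

Under these assumptions the budget is a bit under 1nV/√Hz at 50 ohms and about 4nV/√Hz at 1000 ohms, so the low end of the source impedance range sets the requirement.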

This is my first time doing a CMOS LNA at low frequencies, so I'm trying to get a feel for how to approach this. Most of the literature I'm finding deals either with RF frequencies or with very high source impedances, and therefore higher tolerable vg noise. From my shallow understanding of device noise, my goal should just be to boost the gm of my input devices as much as possible. I've run simulations on some simple circuits and I can get around 3nV/√Hz by just using huge devices and hitting them with lots of bias current (like W/L=800/3um and Ids=1mA). Simulations indicate that my overall noise is dominated by the input diff pair. Switching between NMOS and PMOS devices doesn't seem to make a huge difference, for some reason.
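To put numbers on the "boost gm" idea, here is a small Python sketch of the textbook channel thermal noise estimate for a differential pair, vn² ≈ 2·4kTγ/gm. It assumes the long-channel value γ = 2/3 and T = 300 K, and it ignores load devices, flicker noise, and gate resistance, so treat it as a lower bound on the gm needed rather than a prediction of what the simulator will report. The function names are hypothetical.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K
T = 300.0            # assumed device temperature, K
GAMMA = 2.0 / 3.0    # assumed long-channel thermal noise coefficient


def diff_pair_noise_density(gm):
    """Input-referred thermal noise (V/sqrt(Hz)) of a diff pair, each device with transconductance gm."""
    # Two uncorrelated devices, each contributing 4kT*gamma/gm.
    return math.sqrt(2.0 * 4.0 * K_B * T * GAMMA / gm)


def required_gm(vn_target):
    """Per-device gm (in siemens) needed to reach vn_target from the input pair alone."""
    return 8.0 * K_B * T * GAMMA / vn_target ** 2


if __name__ == "__main__":
    for vn in (3e-9, 2e-9, 1e-9):
        print(f"{vn * 1e9:.0f} nV/rtHz -> gm of about {required_gm(vn) * 1e3:.1f} mS per device")
```

Because the required gm scales as 1/vn², going from 3nV/√Hz to 1nV/√Hz takes roughly nine times the transconductance under this model.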

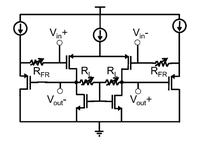

Here's a schematic of the simple circuit I'm simulating. RL is for CMFB while Rfr is for tuning gain.

I actually wouldn't mind just scaling size and gm up even higher, but my space is somewhat limited, and I'm wondering if there's anything more clever than just throwing power and size at the problem.

Also, I know that BJTs would probably be ideal for this, but I don't think they are available in my process, or at least not in my NCSU model kit.
