r.mirtaji

I designed an eight-bit digital-to-analog converter based on MOSFETs.

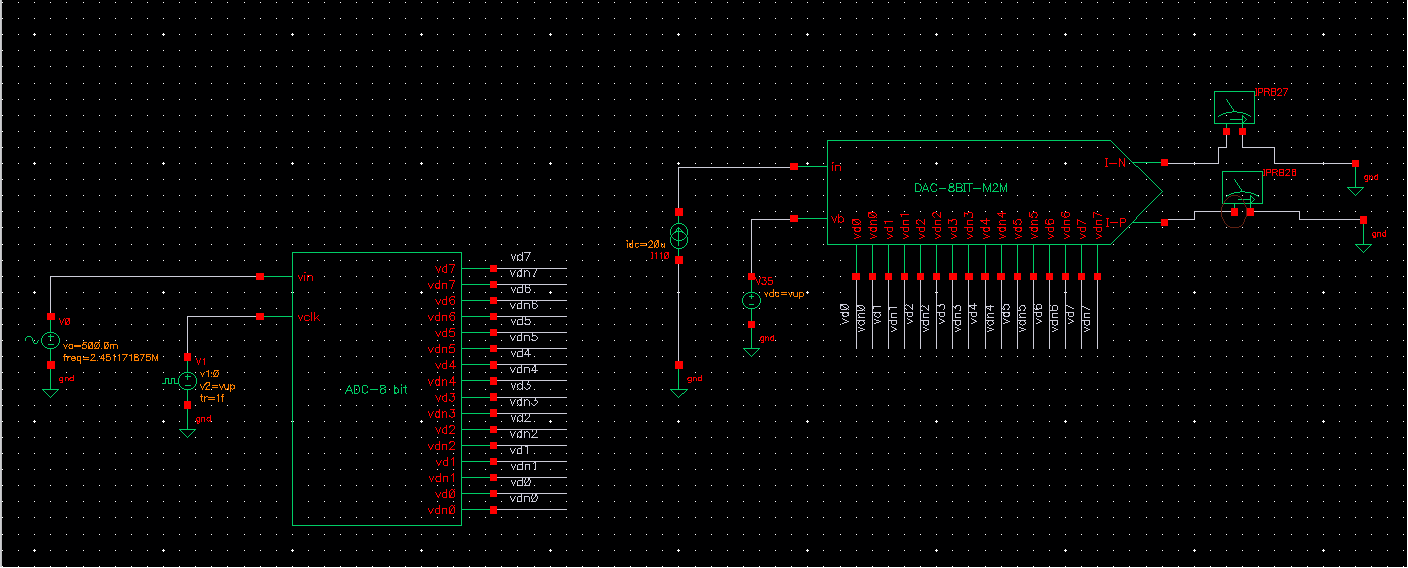

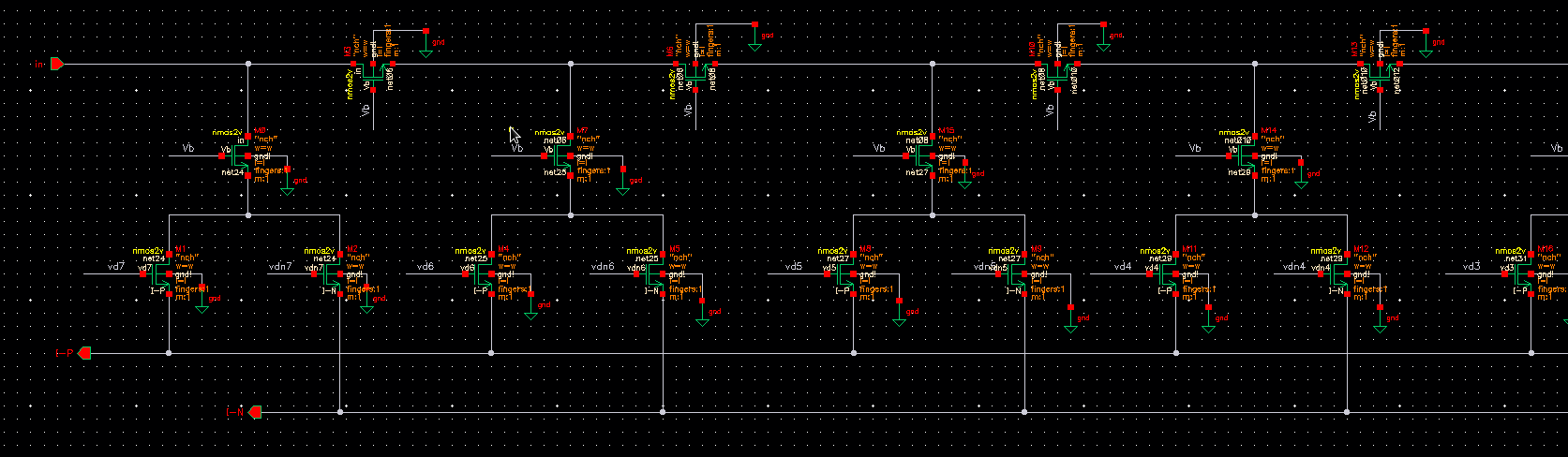

To check the dynamic performance of the converter, I used the following circuit.

The characteristics of the converter are as follows:

Fin = 2.451171875 MHz (input frequency)

Fs = 10 MHz (sampling frequency)

N = 1024 (number of samples)
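As a quick sanity check on these numbers, the sketch below computes how many input cycles fit in the N-sample record. It assumes the intent is coherent sampling (an integer cycle count that is coprime with N), which the post does not state explicitly; the function and constant names are only illustrative.

```python
from fractions import Fraction
from math import gcd

# Values taken from the post; exact rational arithmetic avoids float rounding.
FIN_HZ = Fraction("2451171.875")   # input frequency
FS_HZ = Fraction(10_000_000)       # sampling frequency
N_SAMPLES = 1024                   # record length


def cycles_in_record(fin_hz, fs_hz, n_samples):
    """Number of input-signal cycles contained in n_samples samples."""
    return Fraction(fin_hz) * n_samples / Fraction(fs_hz)


cycles = cycles_in_record(FIN_HZ, FS_HZ, N_SAMPLES)

# Assumed coherent-sampling criteria: integer cycle count, coprime with N.
is_integer = cycles.denominator == 1
is_coprime = is_integer and gcd(cycles.numerator, N_SAMPLES) == 1

print(f"cycles = {cycles}, integer = {is_integer}, coprime with N = {is_coprime}")
```

The same helper can be reused for any other test frequency. For example, an input of exactly 4 MHz gives 409.6 cycles in this record, so a label such as "4M" may be a rounded value, just as "2.5M" is.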

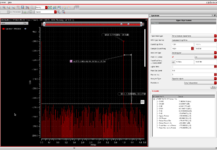

The dynamic characteristics obtained are as follows:

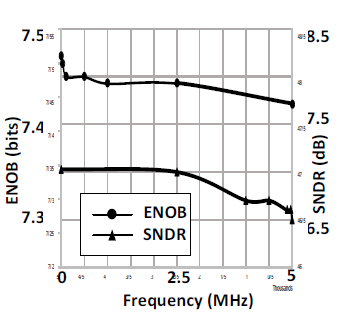

Fin = 2.5 MHz: ENOB = 7.9859418, SINAD = 49.83831 dB, SFDR = 66.166519 dB

When I change the input frequency, the dynamic characteristics do not change; they stay almost constant!

Fin = 4 MHz: ENOB = 7.9859418, SINAD = 49.83831 dB, SFDR = 66.166519 dB
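For reference, ENOB is normally derived from SINAD, so the two reported numbers can be cross-checked. The sketch below assumes the standard relation ENOB = (SINAD - 1.76) / 6.02 with SINAD in dB; the post does not say which formula or constants the simulator used, so small differences in the last digits are expected.

```python
def enob_from_sinad(sinad_db):
    """Effective number of bits from SINAD in dB (standard approximation)."""
    return (sinad_db - 1.76) / 6.02


def ideal_sinad_db(n_bits):
    """SINAD of an ideal n-bit converter with a full-scale sine input."""
    return 6.02 * n_bits + 1.76


reported_sinad_db = 49.83831   # value reported in the post
reported_enob = 7.9859418      # value reported in the post

computed_enob = enob_from_sinad(reported_sinad_db)
print(f"ENOB computed from SINAD: {computed_enob:.4f}")
print(f"ENOB reported in post:    {reported_enob:.4f}")
print(f"Ideal 8-bit SINAD:        {ideal_sinad_db(8):.2f} dB")
```

The reported SINAD sits very close to the ideal 8-bit figure, which is worth keeping in mind when comparing the results at the two input frequencies.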

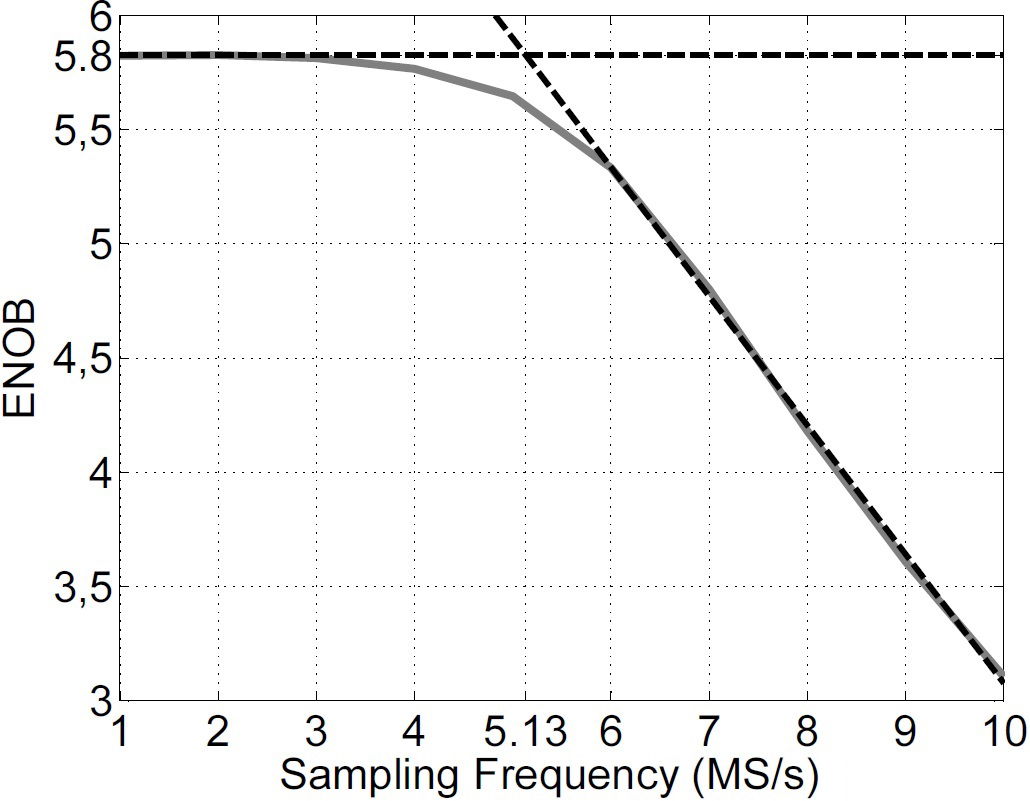

1. Shouldn't ENOB and SFDR decrease as the input frequency increases?

2. To express the performance of the converter, do we plot ENOB against the input frequency or against the sampling frequency?

Why do ENOB and SFDR not decrease with increasing frequency?

The converter circuit is as follows
