dany.1986

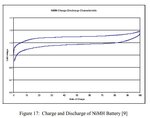

Hello everyone, I am currently working on a solar charger project (to charge a NiMH battery with a nominal voltage of 1.2 V and a capacity of 1300 mAh) and I have run into some behavior that I don't fully understand. My solar panel provides 2.14 V and 1.4 A. However, when I insert the battery, the solar panel voltage drops to 1.5 V (which actually suits the battery under charge, since the datasheet gives a maximum charge voltage of 1.5 V). Can someone explain why the voltage drops? Also, do I need a diode to protect the solar panel against reverse current from the battery, or do the MOSFETs already provide this protection? Below is the circuit. Thanks.