weetabixharry

I have a source-synchronous input to my FPGA, coming from an external chip whose datasheet gives the following information:

- fmax = 300 MHz (single data rate)
- tsetup = 0.4 ns
- thold = 0.5 ns

Using an oscilloscope, it is clear that the data is "center-aligned" (such that the data values are stable near the rising edge of the clock). Therefore, my interpretation of the datasheet is that the data is guaranteed to be stable for 0.9 ns per clock cycle (from 0.4 ns before the rising edge of the clock, until 0.5 ns after).

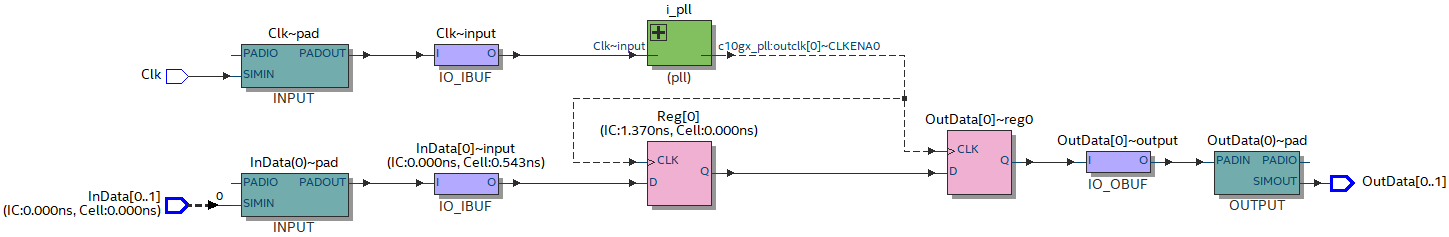
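
As a rough sanity check of that interpretation, here is a plain-Tcl sketch. It is runnable in `tclsh` and uses the same language as the SDC constraints, but the variable names are illustrative only. It derives the clock period from fmax and splits each cycle into the guaranteed-stable part and the part where the data may be changing. Like the interpretation above, it assumes that tsetup and thold bound a single window around the rising edge.

```tcl
# Sanity check of the datasheet numbers (illustrative variable names).
set fmax_mhz 300.0
set tsu 0.4 ;# ns, data stable this long before the rising edge
set th  0.5 ;# ns, data stable this long after the rising edge

# Clock period in ns for a 300 MHz single-data-rate clock
set period [expr {1000.0 / $fmax_mhz}]
# Guaranteed-stable window: from tsu before to th after the rising edge
set valid [expr {$tsu + $th}]
# Remainder of each cycle, during which the data may be changing
set uncertain [expr {$period - $valid}]

puts [format "period=%.3f ns  valid=%.1f ns (%.0f%% of the cycle)  uncertain=%.3f ns" \
          $period $valid [expr {100.0 * $valid / $period}] $uncertain]
```

At 300 MHz this leaves roughly 2.43 ns of each 3.33 ns cycle in which the data is not guaranteed. The FPGA's input register setup/hold, plus any clock-to-data skew inside the device, therefore has to fit within about 0.9 ns.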

I don't think my question is really vendor-specific, but I am targeting an Intel Cyclone 10 GX (part 10CX085). I have stripped my whole design down to two flip-flops (pink blocks below) and I still struggle to meet timing, even if I heavily relax the timing constraints:

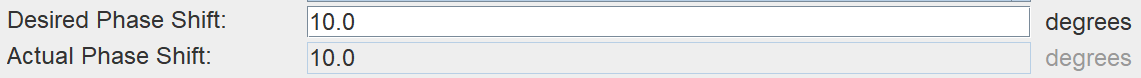

By closely following Intel's free training course, I understand that for best timing performance I should instantiate a PLL (specifically an IOPLL, the green block above) and that my timing constraints should be defined like this:

```tcl
# Define input clock parameters (ns)
set period 3.333
set tsu 0.4
set th 0.5

# Define input delays
set half_period [expr {$period / 2.0}]
# Latest arrival: data is valid tsu before the Clk capture edge at half_period
set in_max_dly [expr {$half_period - $tsu}]
# Earliest change: previous data is held for th after the Clk edge at -half_period
set in_min_dly [expr {$th - $half_period}]

# Create virtual launch clock
create_clock -name virtual_clock -period $period

# Create physical base clock (phase shifted by 180 degrees) on FPGA pin
create_clock -name Clk -period $period -waveform "$half_period $period" [get_ports Clk]

# Create generated clocks on the PLL outputs
derive_pll_clocks

# Set input delay constraints
set_input_delay -clock [get_clocks virtual_clock] -max $in_max_dly [get_ports InData*]
set_input_delay -clock [get_clocks virtual_clock] -min $in_min_dly [get_ports InData*]
```

This failed timing catastrophically, so I tried relaxing to a 10 MHz clock with an extremely generous thold = 25 ns:

```tcl
# Define input clock parameters
set period 100
set tsu 0.4
set th 25
```

It surprised me that this also failed timing. Since the data is stable for >25 ns and I have told the tools when that stable time will be (relative to the phase of the 10 MHz clock), I thought maybe the tools would be able to adjust delays (and/or PLL phase) to ensure the data is sampled near the middle of the "stable" time.
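
The input-delay arithmetic from the two constraint sets can be reproduced in plain Tcl to confirm that both encode the intended stable window. The sketch below also locates the centre of that window relative to the rising edge of `Clk`, in ns and in degrees of the clock period. The `window_report` proc is a hypothetical helper and is not part of the SDC file. The centre figures deliberately ignore the FPGA's own PLL compensation, clock-network and input-buffer delays, which the timing analyzer does include. They are therefore only a rough reference point, not a recommended PLL phase setting.

```tcl
# Hypothetical helper: recompute the SDC input delays and the stable-window
# geometry. FPGA-internal clock and data path delays are ignored.
proc window_report {period tsu th} {
    set half_period [expr {$period / 2.0}]
    # Same formulas as in the SDC constraints
    set in_max_dly [expr {$half_period - $tsu}]
    set in_min_dly [expr {$th - $half_period}]
    # Stable time per cycle remaining after the max/min input-delay spread
    set window [expr {$period - ($in_max_dly - $in_min_dly)}]
    # The window spans -tsu .. +th around the Clk rising edge; take its centre
    set centre_ns [expr {($th - $tsu) / 2.0}]
    set centre_deg [expr {360.0 * $centre_ns / $period}]
    return [dict create in_max $in_max_dly in_min $in_min_dly \
                window $window centre_ns $centre_ns centre_deg $centre_deg]
}

# The two constraint sets from the post: label, period (ns), tsu (ns), th (ns)
foreach {label period tsu th} {
    "300 MHz" 3.333 0.4 0.5
    "10 MHz"  100   0.4 25
} {
    set r [window_report $period $tsu $th]
    puts [format "%-8s in_max=%.4f in_min=%.4f window=%.4f centre=%.3f ns (%.1f deg)" \
              $label [dict get $r in_max] [dict get $r in_min] \
              [dict get $r window] [dict get $r centre_ns] [dict get $r centre_deg]]
}
```

In the 300 MHz case the window works out to 0.9 ns and its centre is only about 0.05 ns after the clock edge. In the 10 MHz case the window is 25.4 ns and its centre is roughly 12.3 ns (about 44 degrees) after the edge.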

However, if I manually adjust the phase of the PLL (to basically anything sufficiently larger than zero) then timing is of course met comfortably:

This leaves me very confused about the following:

- Are my requirements (300 MHz, 0.5 ns, 0.4 ns) feasible with this device?

- If so, then how? (Timing constraints, PLL configuration, etc.)

- If not, then what performance is possible? (I can't see this information anywhere in the FPGA documentation).

The Quartus project is attached...