ikorman

Hi all

First of all, I would like to apologize for my ignorance of basic electronics (I'm more oriented toward uC coding and similar), but I need your help here.

I want to control an RGB LED strip with a TLC5940 LED driver. The TLC5940 is a 16-channel PWM LED driver that uses constant-current sink outputs to control LEDs. Varying the PWM duty cycle makes the LED brighter or darker. Each channel is limited to around 100 mA. As I want to drive a long LED strip which can take up to several amps, I need to drive the strip through transistors, and this is where I'm stuck...

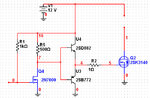

The problem is that I'm not qualified to design this drive stage properly. I have seen a solution where a TIP122 was connected to the TLC output through a 1 k resistor, with a 10 k pull-up resistor, but there were complaints that the transistor was not able to shut off the LED strip completely (remember that the TLC output, when active, sinks a predefined (configurable) current; it was something about not being able to get 0 V on the base of the TIP through the resistors used). Also, with higher PWM frequencies and currents, there could be heating and switching issues.

Therefore I would like to use a MOSFET for this. The problem is that I don't know how to choose a proper MOSFET and which resistors to use for optimum switching (my head hurts from on/off thresholds, input capacitance, turn-on/turn-off times). I believe the circuit is quite simple (one or two resistors and an N-channel MOSFET), but ... :-D

Can somebody help me with this? I'm planning to use a lot of RGB LED segments (32 PWM channels in total per device), so I'm trying to keep the component count to a minimum...

Here are my parameters:

- RGB LED strip is driven by +12V, connected in common anode mode

- PWM freq will be around 1000 Hz, PWM steps 4096

- max current per LED strip/transistor: 6 A

- current sink on TLC output pin when output is active: 10 mA
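
To put rough numbers on these parameters, here is a minimal sketch of the arithmetic. It assumes the MOSFET gate is pulled up to the 12 V rail through a single resistor, and that the TLC output pulls the gate low by sinking its 10 mA through that resistor. The input capacitance `ASSUMED_CISS_F` and on-resistance `ASSUMED_RDS_ON_OHMS` are hypothetical placeholders, not values for any particular part; the real ones have to come from the MOSFET datasheet.

```python
# Rough estimates only; a sketch under the stated assumptions, not a finished design.

SUPPLY_V = 12.0          # LED strip supply (from the post)
PWM_FREQ_HZ = 1000.0     # PWM frequency (from the post)
PWM_STEPS = 4096         # PWM resolution (from the post)
SINK_CURRENT_A = 0.010   # TLC output sink current when active (from the post)
LOAD_CURRENT_A = 6.0     # max current per strip/transistor (from the post)

# ASSUMPTIONS: hypothetical MOSFET figures, replace with datasheet values.
ASSUMED_CISS_F = 1e-9         # input (gate) capacitance, 1 nF
ASSUMED_RDS_ON_OHMS = 0.02    # on-resistance, 20 milliohm


def pwm_step_seconds(freq_hz, steps):
    """Duration of one PWM step (the smallest pulse-width increment)."""
    return 1.0 / (freq_hz * steps)


def min_pullup_ohms(supply_v, sink_a):
    """Smallest pull-up across which the sink current drops the full supply.

    With a smaller resistor, the constant-current output could not pull
    the gate node all the way down, so the MOSFET might not turn off.
    """
    return supply_v / sink_a


def gate_time_constant_seconds(pullup_ohms, ciss_f):
    """RC time constant for charging the gate through the pull-up."""
    return pullup_ohms * ciss_f


def conduction_loss_watts(current_a, rds_on_ohms):
    """Resistive loss in the MOSFET while fully on (I^2 * R)."""
    return current_a ** 2 * rds_on_ohms


if __name__ == "__main__":
    step = pwm_step_seconds(PWM_FREQ_HZ, PWM_STEPS)
    r_min = min_pullup_ohms(SUPPLY_V, SINK_CURRENT_A)
    tau = gate_time_constant_seconds(r_min, ASSUMED_CISS_F)
    loss = conduction_loss_watts(LOAD_CURRENT_A, ASSUMED_RDS_ON_OHMS)
    print(f"PWM step:           {step * 1e9:.0f} ns")
    print(f"Minimum pull-up:    {r_min:.0f} ohm")
    print(f"Gate time constant: {tau * 1e6:.2f} us ({tau / step:.1f} PWM steps)")
    print(f"Conduction loss:    {loss:.2f} W at 100% duty")
```

The point of comparing the gate time constant with the PWM step is that a slow turn-on through the pull-up can swallow the shortest pulses, so the lowest brightness levels may not be distinguishable with a large gate capacitance.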

I'm aware that by using a transistor I may get an inverted PWM signal, but that is completely OK as I can adjust for this in the microcontroller code.
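
The adjustment I have in mind is just flipping the 12-bit value before it is sent to the driver. A minimal sketch of that arithmetic follows; it is written in Python for readability, the function name `inverted_code` is made up for illustration, and it assumes the drive stage really does invert (LED on while the TLC output is off).

```python
PWM_STEPS = 4096            # PWM resolution (from the post)
MAX_CODE = PWM_STEPS - 1    # largest 12-bit PWM value, 4095


def inverted_code(brightness):
    """Map a desired brightness code (0..4095) to the code to send
    to the driver when the transistor stage inverts the PWM signal."""
    if not 0 <= brightness <= MAX_CODE:
        raise ValueError("brightness must be in the range 0..4095")
    return MAX_CODE - brightness


if __name__ == "__main__":
    for wanted in (0, 1000, 4095):
        print(wanted, "->", inverted_code(wanted))
```

On the microcontroller the same thing would be a single subtraction (or a bitwise inversion masked to 12 bits) per channel.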

Note: although the TLC5940 also supports digital control of the current per channel (in addition to PWM), I don't need this part (no current mirrors or whatever). The current will be constant (defined by the TLC external resistor); I will use only PWM for control.

BR

Ivan
