Phrancees69


Hi,

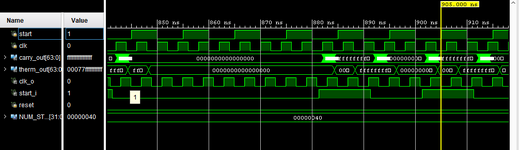

I am working on a tapped-delay-line (TDL) TDC that uses CARRY4 elements as the delay line. The delay chain currently has a length of 64, and the propagation delay of each CARRY4 is 118 ps. I tried to sample the TDL outputs with D flip-flops clocked with a 10 ns period, but the DFF outputs do not sample correctly (they do not update on the clock's rising edge). I am new to FPGAs and digital design. I have also tried supplying the start signal with an LFSR and with a wave-union technique, but the results were no different. Kindly assist. Thank you.
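As a quick sanity check on the numbers above, here is a small Python model. It is a sketch, not the actual FPGA design. It assumes that "length 64" means 64 CARRY4 elements, each adding a uniform 118 ps, and that the sampling clock period is exactly 10 ns. The names `N_STAGES`, `chain_span_ps`, `expected_taps` and `thermometer_to_count` are hypothetical and chosen for illustration. The model computes the total chain delay and the ideal thermometer code the flip-flops should capture when the start edge has been travelling for `t_ps` picoseconds at the sampling edge.

```python
# Hypothetical model of the TDL in the post. Assumptions (not from the post):
# "length 64" = 64 CARRY4 elements, each with a uniform 118 ps delay,
# and the sampling clock period is exactly 10 ns.
N_STAGES = 64
STAGE_DELAY_PS = 118
CLK_PERIOD_PS = 10_000


def chain_span_ps(n_stages: int = N_STAGES, stage_delay_ps: int = STAGE_DELAY_PS) -> int:
    """Total propagation delay through the whole chain, in ps."""
    return n_stages * stage_delay_ps


def expected_taps(t_ps: int, n_stages: int = N_STAGES,
                  stage_delay_ps: int = STAGE_DELAY_PS) -> list[int]:
    """Ideal sampled word (thermometer code): 1 for every stage the start
    edge has already passed t_ps after entering the chain, 0 for the rest."""
    passed = min(t_ps // stage_delay_ps, n_stages)
    return [1] * passed + [0] * (n_stages - passed)


def thermometer_to_count(word: list[int]) -> int:
    """Number of leading ones. Real TDL words can contain 'bubbles',
    which this simple decoder does not correct."""
    count = 0
    for bit in word:
        if bit != 1:
            break
        count += 1
    return count


if __name__ == "__main__":
    span = chain_span_ps()
    print(span, span < CLK_PERIOD_PS)                 # 7552 True
    print(thermometer_to_count(expected_taps(1000)))  # 8
```

Under these assumptions the chain spans about 7.55 ns, which is shorter than the 10 ns clock period, so a start edge that entered the chain more than about 7.55 ns before a clock edge leaves every tap at 1. If the start signal is a level that stays high, the flip-flops may therefore capture the same all-ones word on every later edge, which could look like "no update" on the rising edge.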

I am working on a TDL TDC using carry4 elements as my delay line. I currently have a delay chain of length 64. The propagation delay of the each carry4 is 118 ps. I have tried to sample the TDL output using D flipflops clocked by 10 ns. The output of the DFFs do not sample correctly (no update on clock's rising edge). I am new to FPGAs and digital designs. I have tried using an LSFR and a wave union tecehnique to supply the start signal but results are no different. Kindly assist. thank you