melkord


My supervisor suggested that I do some performance exploration of a circuit (using the optimization feature in Cadence).

So my starting point is only the topology, without any specific target specifications.

The main goal is to find the "border", or limit, of the achievable performance of this specific circuit.

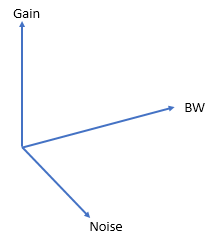

In my mind, I would get something like the picture below.

Gain, BW, and noise are just examples; they could be other circuit performance metrics.
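For illustration only: one common way to formalize such a performance "border" is as a Pareto front, i.e. the set of design points where no metric can be improved without making another one worse. The sketch below assumes the design points have already been simulated (for example, exported from a parameter sweep or an optimizer run) and simply filters out the dominated ones. The `Perf` fields, their units, and the sample numbers are hypothetical; nothing here is a Cadence API.

```python
from typing import NamedTuple


class Perf(NamedTuple):
    """One simulated design point (hypothetical metrics and units)."""
    gain_db: float   # to maximize
    bw_mhz: float    # to maximize
    noise_nv: float  # to minimize (e.g. input-referred noise in nV/sqrt(Hz))


def dominates(a: Perf, b: Perf) -> bool:
    """True if a is no worse than b in every metric and strictly better in at least one."""
    no_worse = (a.gain_db >= b.gain_db and a.bw_mhz >= b.bw_mhz
                and a.noise_nv <= b.noise_nv)
    strictly_better = (a.gain_db > b.gain_db or a.bw_mhz > b.bw_mhz
                       or a.noise_nv < b.noise_nv)
    return no_worse and strictly_better


def pareto_front(points: list[Perf]) -> list[Perf]:
    """Keep only the non-dominated points: the 'border' of achievable performance."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    # Made-up sweep results, purely for illustration.
    samples = [
        Perf(40.0, 10.0, 5.0),
        Perf(35.0, 50.0, 6.0),
        Perf(30.0, 40.0, 7.0),  # dominated by Perf(35.0, 50.0, 6.0)
        Perf(45.0, 5.0, 4.0),
        Perf(38.0, 20.0, 5.5),
    ]
    for p in pareto_front(samples):
        print(p)
```

This brute-force filter is quadratic in the number of points, which is usually fine for a few thousand simulations; the harder part in practice is generating points that actually reach the border, which is what a multi-objective optimizer is meant to do.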

Could someone briefly explain how this is usually done?

I would also really appreciate it if someone could point me to any references on this.
