dirac16

Member level 5

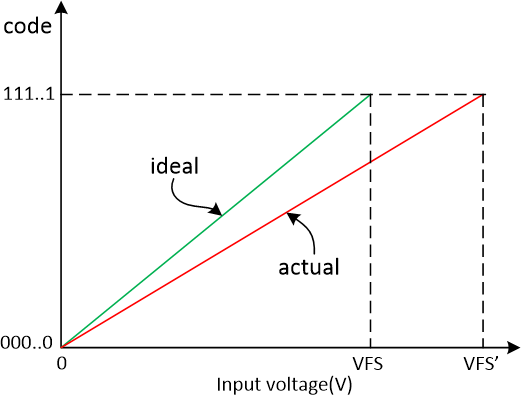

In the image above, the actual transfer function falls below the ideal one because of negative gain error. The usual practice is to compensate for the gain error in software, using a best-fit line or another method chosen by the designer to suit the application requirements.

I am working on an ADC whose specs need to be reported before calibration is performed. Unfortunately, the ADC's actual transfer function has significant negative gain error (as shown above), so I want to measure DNL/INL before any calibration is applied, to showcase how much the post-calibration improves the measured data. The problem is that I am not sure how DNL and INL are measured in the presence of gain error. In particular, how is the LSB size found in the figure above? Should I simply extend VFS to the actual full-scale value VFS' and recompute the LSB size from VFS'? The DNL/INL calculation based on the updated LSB would then be straightforward. Please tell me if I am right.
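To make the proposal concrete, here is a minimal sketch of the calculation I have in mind. It assumes the code transition voltages have already been measured, and it takes the "actual" LSB as the average code width between the first and last measured transitions (an endpoint-style definition, which is my assumption about what rescaling to VFS' means). The function name `dnl_inl_endpoint` and the example numbers are hypothetical, not taken from my ADC.

```python
def dnl_inl_endpoint(transitions):
    """Compute DNL/INL in LSB units with the LSB derived from the measured span.

    transitions[k] is the measured input voltage at which the output code
    changes from k to k+1 (an N-bit ADC has 2**N - 1 such transitions).
    Assumption: the actual LSB is the average code width between the first
    and last transitions, so gain and offset error drop out of DNL/INL.
    """
    n = len(transitions)
    if n < 2:
        raise ValueError("need at least two transition voltages")

    # Actual LSB: measured span divided by the number of code widths in it.
    lsb_actual = (transitions[-1] - transitions[0]) / (n - 1)

    # DNL: deviation of each code width from one actual LSB.
    dnl = [(transitions[k + 1] - transitions[k]) / lsb_actual - 1.0
           for k in range(n - 1)]

    # INL: deviation of each transition from the line through the endpoints.
    inl = [(transitions[k] - transitions[0]) / lsb_actual - k
           for k in range(n)]

    return lsb_actual, dnl, inl


if __name__ == "__main__":
    # Hypothetical 3-bit example: ideal LSB of 1.0 V, every transition
    # stretched by 10 % to mimic a pure gain error with no nonlinearity.
    measured = [1.1 * k for k in range(1, 8)]
    lsb, dnl, inl = dnl_inl_endpoint(measured)
    print("actual LSB:", lsb)
    print("DNL:", dnl)
    print("INL:", inl)
```

With this definition, a transfer function that has only gain error gives DNL and INL of essentially zero, and the gain error itself would be reported separately as the ratio of the actual LSB to the ideal LSB.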
