whack

Member level 5

Hey all,

I'll try to keep this as short as I can. I've got VGA signal generation working on an FPGA and I'm experimenting with different resolutions on a CRT monitor. Now that I've learned how to generate different refresh rates and resolutions, I want to learn how to generate an interlaced VGA signal.

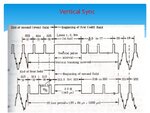

What I need is an explanation of whether the timings differ from a normal ("progressive") VGA scan, and what the waveforms for the horizontal and vertical sync signals look like. (Signal polarities...?)

I don't need help with VHDL or Verilog code; I just need the theory and waveforms. A good diagram from somewhere would be golden.

Information on interlaced VGA modes is scarce on the Internet because, well, pretty much nobody used them. I did search before posting.

Help is appreciated. Thanks!
