treez

Guest

Hello,

We are using PIC16F18856 with a 3V Vdd rail.

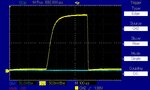

We are using port pin RB3 as an input to receive DALI signals, as the attached image shows (a close-up of part of the pulse stream). The rise time of the received pulse is long, and we wish to know the minimum input voltage that is guaranteed to read as logic high (VIH).

Page 610 of the PIC16F18856 datasheet states that the logic-high input voltage depends on whether the input pin uses a TTL buffer or a Schmitt trigger buffer.

The INLVLB register seems to control whether the pin uses a Schmitt trigger or a TTL input buffer. We obviously want TTL, as its VIH value is lower (at Vdd = 3V: ST VIH = 2.4V; TTL VIH = 1.55V).
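For anyone checking the numbers, here is a rough sketch of how the three thresholds work out on a 3V rail. The formulas (TTL: 0.25·Vdd + 0.8V, ST: 0.8·Vdd, I2C: 0.7·Vdd) are assumptions inferred from the values quoted in this post, and the function names are made up for illustration, so please verify them against the DC characteristics table in the datasheet before relying on them.

```c
#include <assert.h>
#include <stdint.h>

/* All values in millivolts so the arithmetic stays in integers.
 * The formulas are ASSUMED from the figures quoted in the post;
 * confirm them (and their valid Vdd ranges) in the datasheet. */

/* TTL input buffer: assumed VIH(min) = 0.25 * Vdd + 0.8 V */
static uint32_t vih_ttl_mv(uint32_t vdd_mv)
{
    return vdd_mv / 4u + 800u;
}

/* Schmitt trigger input buffer: assumed VIH(min) = 0.8 * Vdd */
static uint32_t vih_st_mv(uint32_t vdd_mv)
{
    return (vdd_mv * 8u) / 10u;
}

/* I2C input levels: assumed VIH(min) = 0.7 * Vdd */
static uint32_t vih_i2c_mv(uint32_t vdd_mv)
{
    return (vdd_mv * 7u) / 10u;
}

/* Hypothetical self-check for the 3 V rail used in this design. */
static void check_3v_rail(void)
{
    const uint32_t vdd_mv = 3000u;

    assert(vih_ttl_mv(vdd_mv) == 1550u); /* 1.55 V */
    assert(vih_st_mv(vdd_mv)  == 2400u); /* 2.40 V */
    assert(vih_i2c_mv(vdd_mv) == 2100u); /* 2.10 V */
}
```

Under these assumptions the TTL buffer gives the lowest VIH of the three at Vdd = 3V, which matches the figures above.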

However, do you think we should instead configure the DALI RX pin as an I2C input (VIH = 2.1V)?

It amazes us that there are so many different input types available for the RB3 pin, each with a different VIH. We want the lowest VIH, but wonder whether “I2C” is more suitable for a DALI RX pin?

PIC16F18856 Datasheet:

https://ww1.microchip.com/downloads/en/DeviceDoc/40001824B.pdf
