mrinalmani

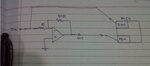

What is the most cost-effective way to compensate for the offset voltage of op-amps? (There is already a microcontroller on the board, and using it is permitted, since it adds nothing to the cost.)